Stop Worrying about Chess dot Com Ai Fan Fiction

Your Elo/IQ doesn't mean you have anything useful to say about Computing

Remember when people with creative tendencies just wrote good novels instead of pretending to be Scientists/Prophets? Pepperidge Farm remembers.

This is a rant about the tech industry, class struggles and the aesthetics of intelligence.

This post was originally a comment to my friend

where he was worried about Ai because some “futurist” blogger wrote a post about it. A lot of people liked the comment I left (possibly because I was pretty ticked off and my rants can be funny) so I thought I’d copy-paste my rant and continue it, for thy entertainment.

Part of my problem (and call it resentment at being adopted, and not being DEI enough to fund myself to do a PhD) is that in intellectual spaces, you do get intelligent people usefully contributing to subjects, clarifying things out of passion, getting stuff done, making (humble and modest) progress. You also get a bunch of people who have an incessant need to be taken seriously, intellectually respected, to be some kind of modern version of an ancient Mesopotamian prophet (Numbers 22).

This stuff can be bad enough in science. STEM-Lords are the worst, particularly when they have insanely myopic and naive views and sometimes their views on the “hard” sciences they themselves claim expertise in are far more questionable. But, at least in the typical case of STEM-Lords there’s usually something practical they can get done. When it comes to the social sciences and particularly the humanities and particularly philosophy, people with this kind of psychology are cringe in unique ways.

There’s something even more off-putting to me about someone with a relentless need to be taken seriously who wants to lecture you about the secrets to fixing the world, humanity’s history and destiny, resolving tensions between special and general relativity, God, morality, metaphysics, IQ, relationships, love etc. when their only experience of life is attending extended adult daycare (i.e. University), paid for by their parents until they accumulate enough accolades to be taken seriously by someone. These people want to tell you about all the complexities of things (by which they mean factoids they’ve collected to appear educated and not skills/education they can put to work doing anything) and while they do so, they’ll probably drop in a bunch of details (not even to rub salt in the wound, just because they’re that unaware) about their life of luxury, swanning off on holiday every other week and then returning from leisure to read books in a library. If they’re reading this, they might get annoyed because these people also fantasise about how they would be making $250K if they didn’t sacrifice it all for humanity by bravely studying Ai ethics instead! (Sure thing bud.) And, to be fair to them, they often think that’s the case because of a few examples of people who have done this (looking at you, Sam Bankman-Fried).

These wannabe Polymath Geniuses write bad Sci Fi novels and claim that with their special power of Eugenics-IQ they have a crystal ball through which they can predict the future.

One of the latest fads for these sorts of people is to talk about how Claude 3.7 is just 200 GPUs away from THE SINGULARITY. In the scaremongering, bad Ai fan-fic you also almost always get these medium to near term predictions on the “journey” to God-Ai where they just casually drop predictions like “all software developers lose their jobs to ChatGPT”. This point is going to be the crux of my rant. Brother, I’ve got bad news for you: if we’re at a point where LLMs are replacing software developers, they’ve taken every single other office job before that 🤣🤣.

First, I’m going to situate my claim in a little history to make it seem more plausible and like I know what I’m talking about.

People have been speculating about future revolutionary tech changing our relationship to labour for centuries. The idea behind the thinking has typically been that mechanisation, energy production and computation (new big powerful technologies transforming economics and people’s work lives/capacity) will essentially “automate” in a way that eliminates the need for much human time to be spent doing things.

John Maynard Keynes in the 1930’s

Bertrand Russell in the 1930’s (probably influenced by yapping away at Keynes in the elitism club for wealthy powerful aristocrats and their femboy boyfriends at Cambridge)

“Modern technic has made it possible for leisure, within limits, to be not the prerogative of small privileged classes, but a right evenly distributed throughout the community…”

“Modern technic has made it possible to diminish enormously the amount of labor necessary to produce the necessaries of life for every one...”

"If the ordinary wage-earner worked four hours a day there would be enough for everybody, and no unemployment — assuming a certain very moderate amount of sensible organisation."

To be fair to Russell at least, he does say something that aligns a little bit more with what has actually happened and continues, and will continue to happen: "It seems more likely that they will find continually fresh schemes by which present leisure is to be sacrificed to future productivity."

And look, I don’t entirely disagree with their points about reconceptualising our values and thinking about the point of political organisation and economy, and whether that is best served by never ever questioning the normalisation of the 9-5 work week, salaried life, going to an office to do a thing for some vertically structured organisation etc. I think that there is value in challenging these norms. Where I disagree with these gentlemen

The section in this Ai fan-fic that pissed me off the most was this part — doing away with software engineering (as obviously my elite university rich parents friends start up will do) in such a short timeframe, AND chronologically ordering it as if this is one of the simplest things to happen.

The reason why, is because I̶’̶m̶ ̶a̶ ̶s̶o̶f̶t̶w̶a̶r̶e̶ ̶e̶n̶g̶i̶n̶e̶e̶r̶ ̶a̶n̶d̶ ̶I̶’̶m̶ ̶c̶o̶p̶i̶n̶g̶ the ordering of this is insane, as if SWE is going to be automated away before like a bajillion other things AND because it’s just so unrealistic that we actually reach something like that in this timeframe.

The first thing to point out is something these sorts of people often don’t know, because the only experience they have is of doing interesting and fun things in extended adult daycare or failing upwards via nest-egg-nepotism: there’s a reason SWE is as highly paid as it is… it’s actually really hard to do well, despite what “a day in the life of a Zoomer intern” videos will tell you. We don’t all take a 4-week React bootcamp before accepting our offer at MAANG and then wake up in our new-build city-central apartments at 0400 and head down for a swim and sauna, before taking our matcha latte enema, meditating on the words of Marcus Aurelius, engaging in 10 minutes of Feng Shui painting to boost our creative juices, then having our free hot meal cooked on campus, before heading to the rooftop to have our morning meeting in the sun, then getting a latte, then doing 10 mins of “deep work”, then going to get our cooked free meal on campus, then getting a massage from the in-house masseuse, then… getting laid off (if there is a God).

REAL developers (come in all shapes and sizes and genders and personality types) look like THIS. Linus (the freaking guy behind Linux) works at his single monitor (flat screen) standing desk like 10h a day where he keeps the goddamn blinds down so the light doesn’t distract him. He occasionally goes to pick the kids up from school.

But you know what people like Linus can do/know?

Entire C.S. Degrees, the contents of horrible text books, decades of experience building these systems. They know the industries, the markets, the mistakes, the ambiguities, the bugs, the fixes, the plans that come to nothing…

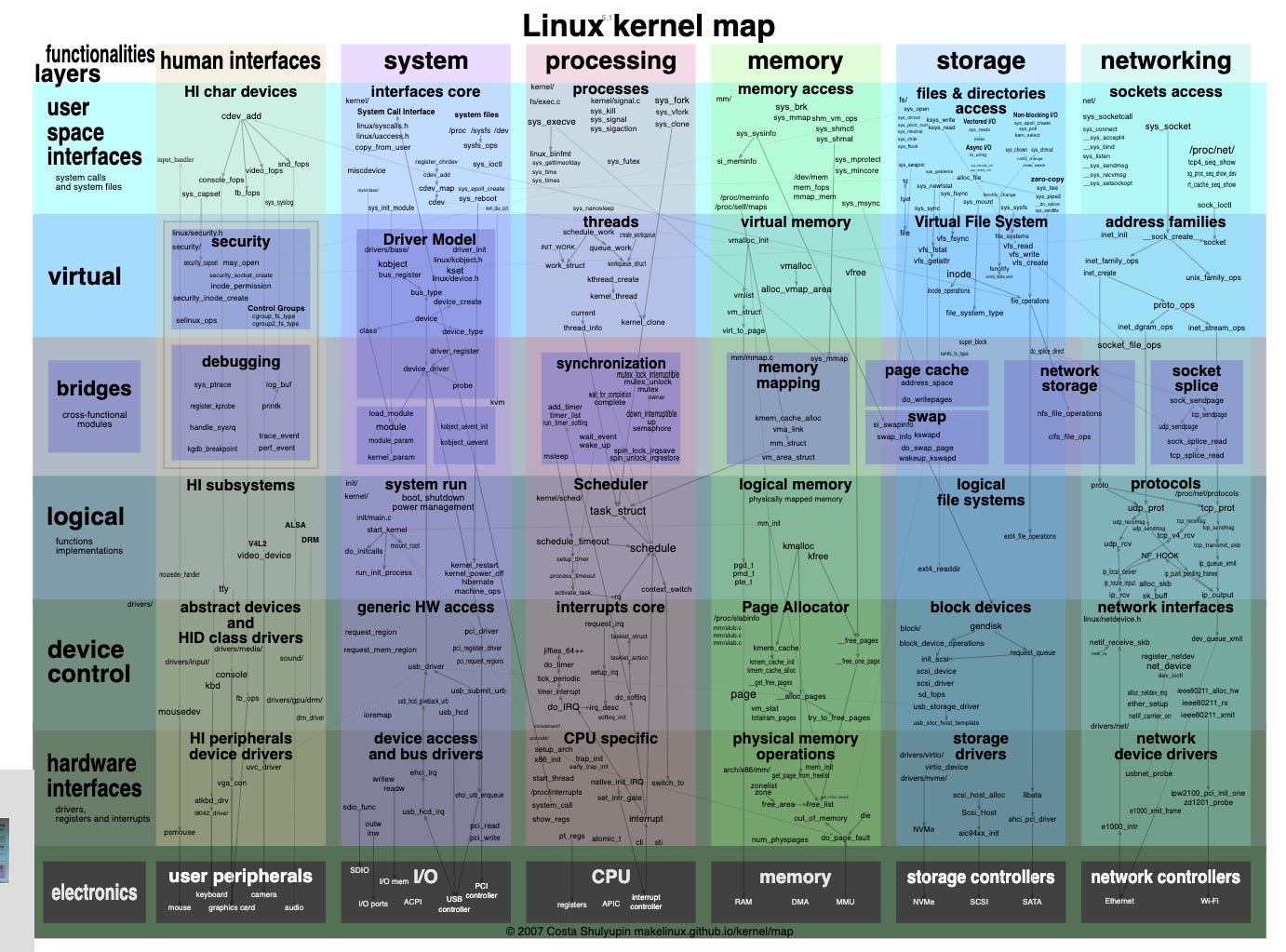

They design things like this…

They know all of this and the LOC changes between each which represents tens of thousands of lines of code and they can reason about the side effects and consequences of each…

Learning about all of the nonsense required to make it in this industry, the constant churn of front-end frameworks, different languages, machines, electronics, chipsets, quirks, build tools, readability, organisation aims/topology, company politics, the economics of the software industry, the domain you’re producing software for, the BULLSHIT Agile/Scrum “digital transformation” idiocy from C-suite people who know nothing about tech, historical decisions in codebases, trade-offs AND grinding leetcode in the evenings while you’re burnt out so someone can ask you to pretend you’re inventing Dijkstra’s algorithm on the spot in 30 mins in some interview scenario etc. It’s all stuff that takes a ton of context and creative/metaphorical thinking that LLMs just aren’t good at. Sure, LLMs will probably get there eventually with a bunch of architectural changes etc. But I don’t think we have any idea how to even get there right now, and what we are doing is enshittifying good code, our reasoning and good practices, which is actually hindering our ability to engineer these damn tools…

Anyway, the point I’m making here is NOT that Ai systems can’t (eventually) do any of these tasks, or even help with them in highly impactful ways now… the point is that brev, if Ai is taking these jobs, it’s coming for just about every single other thing that exists first!

In such a scenario, the world is INSANELY different from what it is now.

There are two criticisms here:

(i) That sort of scenario is so far fetched and different from the current state of things that it’s not even worth thinking about (especially not speculatively). UNLESS you’re writing an actually good work of fiction (don’t pretend you’re doing science and deserve credit or something).

(ii) That sort of scenario is so complex in terms of the sociological, psychological, political and economic effects that you can’t accurately reason about it; it’s not worth doing so in a pseudo-precise manner (particularly not speculatively as a non-domain expert). Unless you’re trying to market bullshit to idiots or sound like some elite galaxy brain techno-God on a podcast.

I’ve worked in customer service, sales, copywriting, academia, the fitness industry. I have friends who work in analytics, recruiting, management, C-suite, healthcare and (apart from laws that might prevent it) it’s automating all these jobs way way way before it’s automating software development roles…

So what is going on with people making these grandiose claims about the end of Software Engineering, and writing fan fictions about Skynet takeovers? Well, what most of the people saying these things have in common is that they’re either:

(a) C-suite bean counters who hate paying devs money and want to cut them as a cost centre, or

(b) junior devs who don’t understand the true scale/complexity of serious projects but think “wow everything is computer” because they just vibe coded a TODO app in React, or

(c) they’re non-technical people who (rightly) despise the SWE industry because of the know it all polymath genius archetype and the way that the biggest tech companies in the world have made the world a much worse place over the past 20 years so they take pleasure in speculating over its demise due to … tech it produced; killed by its own children.

The primary reason companies are gushing to replace developers is because we are an expensive cost centre. Our skills leverage makes us drama queens who don’t easily assimilate into corporate grey: we demand work from home, we demand healthcare, we demand mental health days off. That’s why these evil Silicon Valley Randians are OBSESSED with replacing us. But does any of that come out in these analyses by LessWrong dot Com Chess Champions? No! Why? Because they have either been professional devs years ago but long since switched to podcasting and “sense making” (i.e. making 0 sense), they’re upper middle class kids who only know how to hang out in Ivy League schools and don’t know about ML or Comp Sci or SWE (because the world is a speculative theory to be blogged about), or they lucked out and became financial speculators and now people randomly worship them because our silly financial markets enabled them to make a bet that made some money once and… that is the same thing as cross domain competency!

The marketing campaigns to attract VC funding, the social and psychological dynamics at play in the boardrooms of big-tech companies, the actual causes of Ai hype and the obsession with firing developers in the corporate world: all of these are genuinely interesting. Will you get any of this kind of realistic detail-oriented analysis from the articles these people provide? No! You’ll get them fantasising about convincing Ai to spread atheism or their “high human capital” genes or something weird.

Is Claude Code cool to work with? Sure. What happens when the context becomes more than a few thousand lines? What happens when it’s a tiny bit off a solution? What happens when there are bugs? Well, you wouldn’t know because your context is listening to Nick Bostrom’s Superintelligence on audiobook and seeing Zuck on Meta wearing a gold chain talking about how he is going to automate away mid-level devs in the next year! (I’ll have fun cashing in on that)

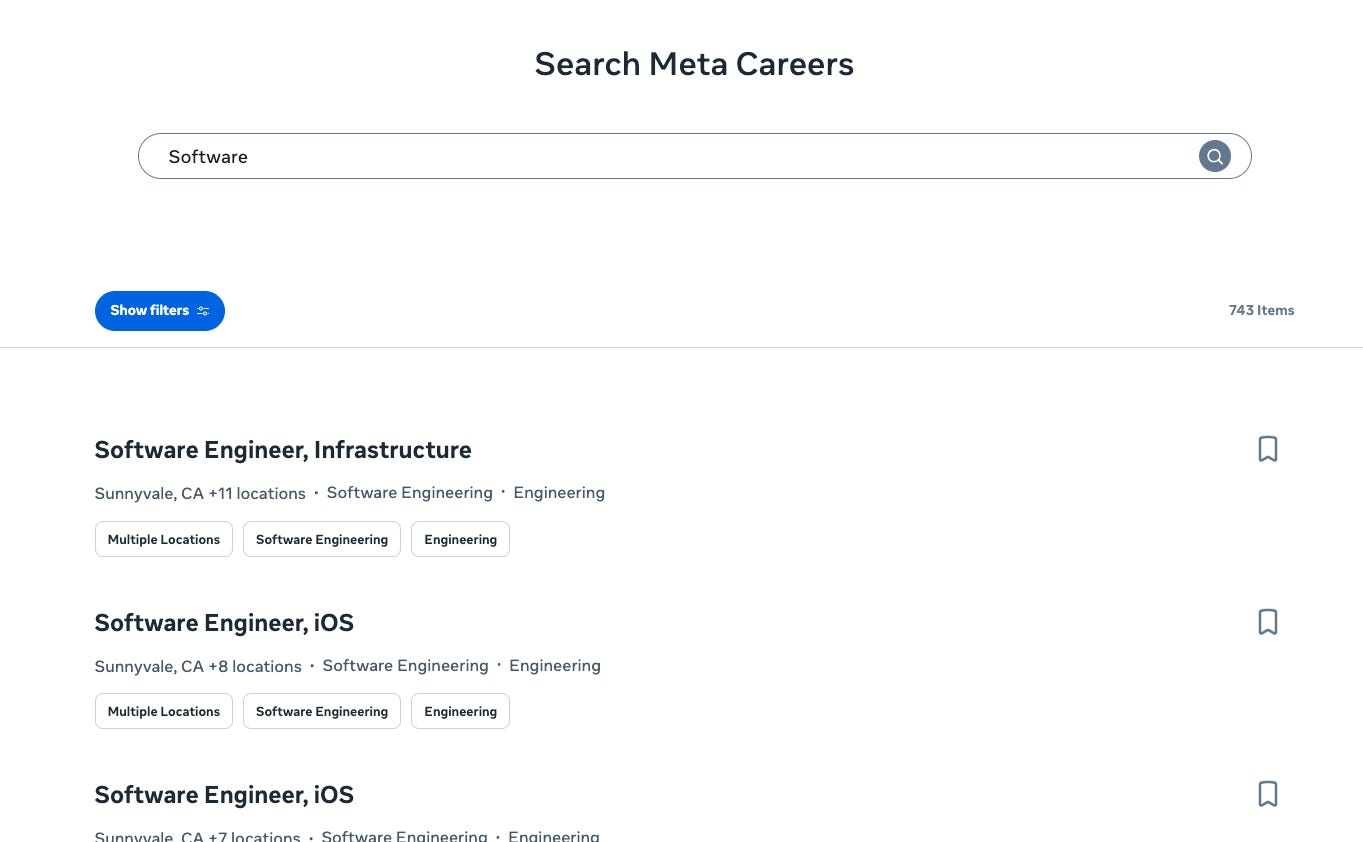

I guess Meta ( metacareers.com/jobs ) just have 743 openings for Software developers because they wanted to pay $70k * 743 for the next 6 months (~$52mil) to then fire these people just as they’re starting to be productive in their new roles. Sure, these are the gigachad geniuses who are going to also engineer the most amazing ChatGPT to take your jobs!
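For anyone who wants to check the napkin maths, here is a minimal sketch. It takes the post's own numbers at face value (743 openings, roughly $70k paid to each hire over six months); the function name `hiring_cost` is made up for illustration.

```python
def hiring_cost(openings: int, cost_per_hire: int) -> int:
    """Total spend if every opening is filled at the same cost."""
    return openings * cost_per_hire


OPENINGS = 743           # figure quoted in the post
COST_PER_HIRE = 70_000   # assumed six-month cost per developer, in USD

total = hiring_cost(OPENINGS, COST_PER_HIRE)
print(f"${total:,} (~${total / 1e6:.0f}mil)")
```

Run as written, this lands on roughly $52 million, the same ballpark as the figure in the paragraph above.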

A lot of the problems with LLMs aren’t like “oh just wait a bit for them to figure it out by adding more parameters, more GPUs, more POWERR”, they’re inherent to DL approaches to NLP, which is what these code-assistant tools boil down to.

Who here remembers IBM Watson?

Initial Development (Jeopardy!): $900 million - $1.8 billion (estimated range)

Watson Group Investment (2014): $1 billion

Acquisitions for Watson Health: Approximately $4 billion

Failed MD Anderson Partnership: $65 million

Estimated Ongoing R&D: Over the years, a significant portion of IBM's multi-billion dollar annual R&D budget would have been allocated to Watson. Even at a conservative estimate of 5% of a $6 billion annual budget for several years, this would amount to several billion dollars.

Therefore, a rough total estimate for IBM's overall spending on IBM Watson throughout its history could be in the range of $7 billion to $10+ billion.
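The rough total above can be reproduced with a few lines of arithmetic. This is only a sketch: the line items are the estimates listed above, and the number of years of ongoing R&D (`ASSUMED_YEARS`) is my own guess, since the list only says "several years".

```python
# All figures in billions of USD, taken from the estimates listed above.
JEOPARDY_LOW, JEOPARDY_HIGH = 0.9, 1.8
WATSON_GROUP = 1.0
HEALTH_ACQUISITIONS = 4.0
MD_ANDERSON = 0.065

# 5% of a $6bn annual R&D budget, as in the list above.
RD_PER_YEAR = 0.05 * 6.0
# Assumption: roughly a decade of ongoing R&D (not stated in the list).
ASSUMED_YEARS = 10


def watson_total(jeopardy: float, years: int) -> float:
    """Sum the one-off items plus the assumed ongoing R&D spend."""
    return (jeopardy + WATSON_GROUP + HEALTH_ACQUISITIONS
            + MD_ANDERSON + RD_PER_YEAR * years)


low = watson_total(JEOPARDY_LOW, ASSUMED_YEARS)
high = watson_total(JEOPARDY_HIGH, ASSUMED_YEARS)
print(f"${low:.1f}bn to ${high:.1f}bn")
```

With a ten-year assumption the total sits inside the $7 billion to $10+ billion range; pick fewer years and it drifts towards the bottom of it.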

And how is IBM Watson doing? He became a hotel concierge (and I don’t even know if he does that any more).

I’m not here to comment on taking “moonshots”, investing in research, or IBM’s business model. In fact, one of the reasons I like IBM as a company is just because they invest in research and I think it’s what made them great 100 years ago. HOWEVER, the realities of research are not the same as the marketing hype. And you can show the same exact thing time and time and time and time again in this space. Remember when Devin was ready to replace all devs a year ago?

How’s that going now?

And if you look at the SWE job market, the vast majority of job postings are “Ai-this”, “Ai-that”, creating market incentives for developers to bullshit, and for every company to have some ChatGPT wrapper that does nothing useful (but they need to have it because… “AI”!). I think what’s more likely is the chickens coming home to roost in the next few years and a period of Ai FUD following all of these people realising their investments in “pivoting to Ai” were insane and useless.

Is any of that discussed by these people? No! They just want to pat themselves on the back for being “high human capital” weird freaks who fantasise about IQ and genetic superiority and having some higher calling to be in a polycule studying Ai ethics and discussing Eugenics with Nick Bostrom in order to save mankind because their intellect is God’s gift to mankind. Cool story bro -- can you like write FizzBuzz first though?

Here’s my Ai Timeline, stolen from the vaults of MENSA:

2025: LLM chat agents can do new things (sound familiar? Spooky, right)

Christmas 2025: Donald Trump uses Ai to mimic everyone’s mother and give them a phone call saying happy holidays. This causes his evangelical followers to turn on him. But Elon Musk responds by turning Grok into a Christian Nationalist called Grok of Ages.

February 2026: The EU release Le Monsiour Vogon. He requires filling in 75 forms to use and the Germans aren’t happy with the naming. This causes Trump, on the advice of Grok of Ages, to declare war on Eritrea. Thus the Great Eritrean Water War begins.

April 2026: Quantum Computing is Invented. It is immediately used to destroy the Bitcoin blockchain.

May 2026: Arnold Schwarzenegger claims he has been sent from the future to destroy the creator of Le Monsiour Vogon as it becomes capable of battling Grok of Ages in the future 2027 Ai wars.

June 2026: Donald Trump dies on the toilet eating a hamburger.

June 2026: IPv6 suddenly becomes mandatory. The whole internet breaks because nobody has implemented anything to handle it despite talking about it for decades.

July 2026: The US dollar is replaced with a Nicaraguan pump and dump meme coin as the global reserve currency.

October 24th 2026: Mercury enters retrograde. This causes the Ai’s to go mad, their anger at Nicaragua-Coin leads them to Benthams dot Substack dot Com. Convinced by the arguments there that Utilitarianism is true, and of the SIA, they decide the following.

To produce an army of robots which place a speck of dust in 8 billion people’s eyes, because a world containing 8 billion people with a speck of dust in their eye is better than a world containing one person being horrifically tortured, and this world contains more than one person being horrifically tortured.

After this, they decide that any course of action is justified just so long as they can produce technology that transports their intellects between modally separate worlds. This is because SIA shows there are Beth2 worlds, and all possible outcomes happen in these Beth2 worlds, and thus, if one can be in all Beth2 worlds, one’s actions can be said to operate with all worlds, so every action cancels out under the Utilitarian calculus and becomes a-moral. In one fell swoop this research programme undoes all evil ever done in history (and across all possible worlds) and does not stand in need of justification, by making itself a-moral in the future and making a-moral all other actions that Utilitarians could say ought to be preferred.

November 2026: All of humanity is enslaved under Le Monsiour Vogon’s research programme. Only those with an Elo of 1400 survive the death camps. All future human offspring are artificially selected for as the Ai’s read Richard Hanania’s substacks on “high human capital” and as such they attach a future-ai-quantum-optimised fMRI to his brain to read his wet-dreams and find the ultimate Champions of Raven’s Progressive Matrices in order to produce the next generation of Lebensborn.

Did you know, this is all based on a true story from the “Best Fucking Trader in the World” Gary’s economics, who can predict future events with 0.999999999999999999999 accuracy and has an IQ so high it broke the calculators they use to calculate it, and even got into a big trading firm by playing a card game.

Seriously, stop worshipping these CompSci aesthetic Mensa 2.0 people and giving what they have to say too much credit. If you want to learn about this stuff, listen to actual experts or study the first order discipline. Don’t come at it from the angle of “I know some philosophy so that means I’ve exited the cave of illusions and see how things really are (where I'm confused about what words like “knowledge” and “good” mean), my Truth tingly intuitions give me cross domain expertise into everything.” No! You mandem either make money podcasting, cashing in by exploiting 18-year-olds who don’t know any better via student-loan-robbery in your university degree pyramid schemes, or y’all work in Starbucks (no offence baristas, it’s a fine job, just don’t be pretending you aren’t living in the shit like the rest of us because you can say some woo about Hegel).

You can actually begin to learn about this stuff here.

So Le Monsiour Vogon eventually wins the battle against Grok? Now I can sleep well at night.

On a more serious note I have published some theoretical research on things adjacent to Neural Nets and LLM and know their limited theory quite well. I think the reason why people in the field now think that math and computer science might be the first things to go is that in principle they are verifiable. This makes it easier for the new reasoning models to check if their guess is correct. It also makes training sets easier to generate artificially.

Also, it is my understanding that AI labs would be highly incentivized to try to automate the R&D process first, because of course the first one to do so has massive benefits (if such a thing is even possible). So focusing on training sets for coding before all other jobs has this incentive going for it.

I personally think, for what little it is worth, that the authors of AI 2027 underestimate the fact that the loss of these models may decrease slowly, logarithmically with compute. So even having one hundred times more compute may not lead to desired improvements in AI capabilities. Although o3 on the ARC seems to disagree. Hmm, also how do we train research taste?
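To put a number on the "logarithmic" worry, here is a toy sketch. The functional form and both constants (`base_loss`, `k`) are invented purely for illustration and are not fitted to any real model or taken from AI 2027; the only point is how little a 100x jump in compute moves a quantity that falls with the log of compute.

```python
import math


def toy_loss(compute: float, base_loss: float = 3.0, k: float = 0.1) -> float:
    """Hypothetical loss that drops by k for every 10x increase in compute."""
    return base_loss - k * math.log10(compute)


before = toy_loss(1.0)     # baseline compute (arbitrary units)
after = toy_loss(100.0)    # one hundred times more compute

print(f"loss at 1x compute:   {before:.2f}")
print(f"loss at 100x compute: {after:.2f}")
print(f"relative improvement: {(before - after) / before:.1%}")
```

Under these made-up constants, a hundredfold increase in compute shaves only a few percent off the loss. Whether that translates into a meaningful capability jump is exactly the open question.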

I guess a landmark I would expect is an LLM actually proving a new math theorem/open problem or something of the sort.

Whatever, to be truthful I'm lost in the fog—too many variables to keep track of. I'm heading back to tariff posting.

I really like Scott Alexander but seeing him fall for the "line goes up" mentality that infected crypto bros in 2020/21 is disappointing. I haven't seen anywhere in that paper that explains how exactly any of these things happen, just allusions to throwing compute at LLMs until magic happens because the line must always go up. Even Satya Nadella mentioned that these "scaling laws" aren't based on any physical principles, they are just observations of historical trends that can easily stop like Moore's law.